Analysis of the Linux Kernel Boot Process on ARM64 (Part 1)


- Linux内核版本:5.10.90

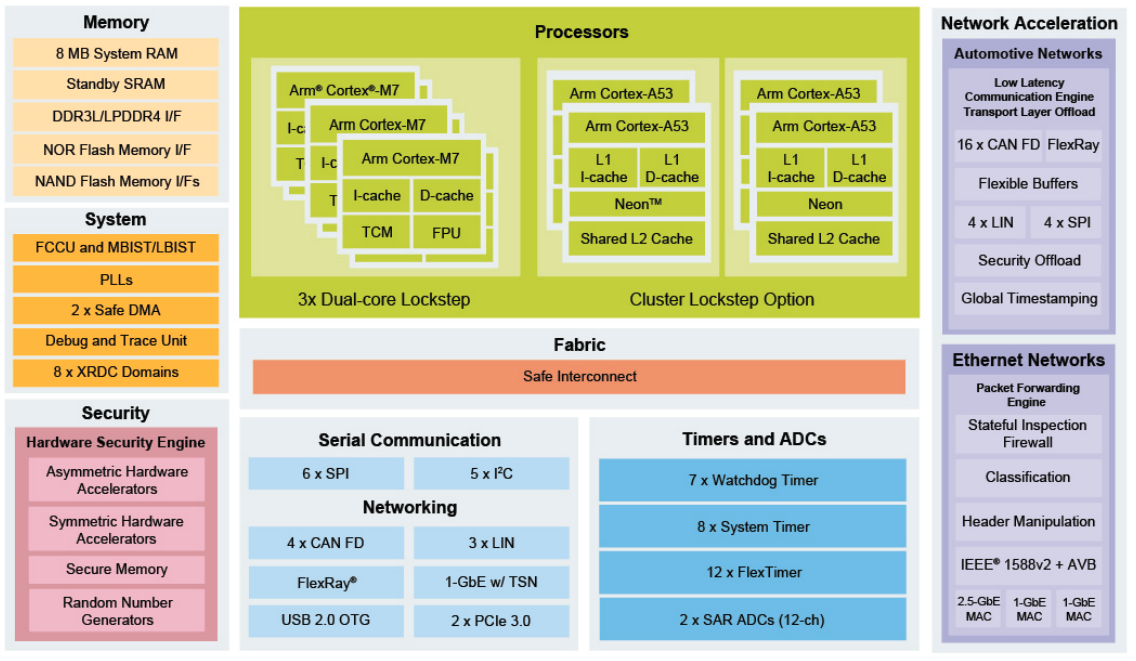

- 硬件:NXP S32G-VNP-RDB2 (4 * A53,ARM64)

1. ROM code

Loads the Linux bootloader from an external device (serial port, network, NAND flash, USB storage device or another disk device).

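2. Bootloader

- Initializes the system RAM and passes the RAM information to the kernel

- Prepares the device tree blob (DTB) and passes its start address to the kernel

- Decompresses the kernel (optional)

- Loads the Linux kernel and hands control over to it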

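Before jumping into the kernel, the following conditions must be met:

- Quiesce all DMA-capable devices so that memory does not get corrupted by bogus network packets or disk data.

- Primary CPU general-purpose register settings

x0 = physical address of the device tree blob (dtb) in system RAM.

x1 = 0 (reserved for future use)

x2 = 0 (reserved for future use)

x3 = 0 (reserved for future use)

- CPU mode

All forms of interrupts must be masked in PSTATE.DAIF (Debug, SError, IRQ and FIQ).

The CPU must be in either EL2 (RECOMMENDED in order to have access to the virtualisation extensions) or non-secure EL1.

- Caches, MMU

The MMU must be off.

The instruction cache may be on or off.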


3. Booting the Linux kernel

3.1 Where does the Linux kernel start?

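The Linux kernel image comes in the following forms; the board currently uses the uncompressed Image.

- vmlinux: the original kernel file; a bootable, uncompressed (and compressible) kernel image in ELF format

- Image: uncompressed; generated with objcopy -O binary, which essentially discards the symbol and relocation information and keeps only the raw binary data

- zImage: compressed; the binary compressed with gzip (on arm64 the corresponding build target is Image.gz)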

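The simplest way to find the Linux kernel's startup entry is to look it up in articles online. The entry point also carries the comment "Kernel startup entry point", so searching for that string leads straight to head.S.

This article instead disassembles vmlinux to find the first instruction the Linux kernel executes, and then uses that instruction to locate the kernel entry point.

3.1.1 Finding the kernel entry point by disassembling vmlinux

First, look at the vmlinux ELF header: the entry point address is 0xffffffc010000000.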

P.S.

Where is the entry address set?

Through an offset (TEXT_OFFSET) of 256 MB.
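Here is a quick arithmetic check of the 256 MB figure. It assumes CONFIG_ARM64_VA_BITS=39. The article does not state the VA width, but the 0xffffffc0... prefix is consistent with it. The helper names are hypothetical. The sketch only reproduces the numbers and does not claim to mirror how the kernel headers spell the computation.

```c
#include <assert.h>
#include <stdint.h>

#define SZ_256M (UINT64_C(256) << 20)

/* Lowest address of the upper half of the kernel (TTBR1) range: -(1 << (va_bits - 1)). */
uint64_t upper_kernel_half(unsigned va_bits)
{
	assert(va_bits >= 36 && va_bits <= 52);
	return UINT64_C(0) - (UINT64_C(1) << (va_bits - 1));
}

/* Entry address = start of that half + the 256 MB offset discussed above. */
uint64_t entry_address(unsigned va_bits)
{
	return upper_kernel_half(va_bits) + SZ_256M;
}
```

With 39 bits, -(1 << 38) is 0xffffffc000000000. Adding 256 MB (0x10000000) gives 0xffffffc010000000, the entry address in the ELF header. For a 48-bit configuration the same arithmetic gives 0xffff800010000000.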


Disassemble vmlinux.


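From vmlinux.S we can see that the first instruction the kernel executes is:

b primary_entry

The corresponding section is .head.text (starting at the symbol _text), and arch/arm64/kernel/head.o also shows up; head.o is built from head.S.

So the kernel starts running in arch/arm64/kernel/head.S, and its first instruction is b primary_entry.

System.map also shows the entry point:

ffffffc010000000 t _head

ffffffc010000000 T _text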


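Note that CONFIG_EFI is indeed not set in our configuration. With CONFIG_EFI, head.S would instead begin with an add x13, x18, #0x16 instruction whose encoding forms the "MZ" signature required by UEFI, and b primary_entry would be the second instruction.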
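Since CONFIG_EFI is off, the first 32-bit word of the Image is a plain branch. The sketch below assumes the 64-byte Image header layout described in Documentation/arm64/booting.rst. It checks the header magic and decodes an unconditional B instruction. is_arm64_image() and decode_b() are hypothetical helpers, not kernel functions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* 64-byte arm64 Image header (Documentation/arm64/booting.rst); fields are little endian. */
struct arm64_image_header {
	uint32_t code0;       /* executable code: b primary_entry when CONFIG_EFI=n */
	uint32_t code1;       /* executable code */
	uint64_t text_offset; /* image load offset */
	uint64_t image_size;  /* effective image size */
	uint64_t flags;       /* kernel flags */
	uint64_t res2, res3, res4;
	uint32_t magic;       /* "ARM\x64" */
	uint32_t res5;        /* reserved (PE COFF offset when EFI is enabled) */
};

#define ARM64_IMAGE_MAGIC 0x644d5241u

/* Read a little-endian u32 regardless of host byte order. */
static uint32_t get_le32(const uint8_t *p)
{
	return (uint32_t)p[0] | (uint32_t)p[1] << 8 |
	       (uint32_t)p[2] << 16 | (uint32_t)p[3] << 24;
}

/* True if buf starts with an arm64 Image header carrying the right magic. */
bool is_arm64_image(const uint8_t *buf, size_t len)
{
	assert(buf != NULL);
	if (len < sizeof(struct arm64_image_header))
		return false;
	return get_le32(buf + offsetof(struct arm64_image_header, magic)) == ARM64_IMAGE_MAGIC;
}

/* If insn is an unconditional B, store its byte offset in *off and return true. */
bool decode_b(uint32_t insn, int64_t *off)
{
	if ((insn & 0xfc000000u) != 0x14000000u)
		return false;
	int64_t imm26 = insn & 0x03ffffffu;
	if (imm26 & 0x02000000)    /* sign-extend the 26-bit immediate */
		imm26 -= 0x04000000;
	*off = imm26 * 4;          /* the immediate counts 4-byte instructions */
	return true;
}
```

Applied to the first word of the board's Image, decode_b() would yield the distance from _head to primary_entry.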


3.2 Stage 1 (assembly language)

3.2.1 primary_entry


3.2.2 preserve_boot_args

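Purpose: save the four registers x0 to x3 passed in by the bootloader.

The ARM64 boot protocol places strict requirements on these four registers: x0 holds the physical address of the dtb, and x1 to x3 must be 0. When __inval_dcache_area is then called, x0 holds the start address of the boot_args array and x1 its size, 0x20 bytes (4 × 8 bytes). Later, setup_arch reads boot_args and checks it.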
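This is a user-space model of both halves of the mechanism: storing x0 to x3 into a four-element boot_args array (0x20 bytes), and the later check that x1 to x3 are zero. save_boot_args() and boot_args_valid() are hypothetical names. The warning text is modeled on the message setup_arch() prints. The MMU-off and cache aspects of the real code are not modeled.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <stdio.h>

/* Stand-in for the kernel's boot_args[]: 4 x 8 bytes = 0x20 bytes. */
static uint64_t boot_args[4];
static_assert(sizeof(boot_args) == 0x20, "boot_args must cover x0..x3");

/* What preserve_boot_args does: record x0..x3 as they were at kernel entry. */
void save_boot_args(uint64_t x0, uint64_t x1, uint64_t x2, uint64_t x3)
{
	boot_args[0] = x0;  /* DTB physical address */
	boot_args[1] = x1;
	boot_args[2] = x2;
	boot_args[3] = x3;
}

/* The later check: x1..x3 must still be zero, otherwise warn. */
bool boot_args_valid(void)
{
	if (boot_args[1] || boot_args[2] || boot_args[3]) {
		fprintf(stderr, "WARNING: x1-x3 nonzero in violation of boot protocol\n");
		return false;
	}
	return true;
}
```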

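A DMB ensures that the stp stores have completed before the dc ivac instructions execute.

The cache lines covering the boot_args variable are then invalidated (partial lines are cleaned first). With the MMU off, the stores went straight to memory, so any stale copies of these lines in the cache must be discarded before the data is later read through the cache.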

For __inval_dcache_area, see arch/arm64/mm/cache.S:

Ensure that any D-cache lines for the interval [kaddr, kaddr+size) are invalidated. Any partial lines at the ends of the interval are also cleaned to PoC to prevent data loss.
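The contract quoted above can be modeled by walking the range one cache line at a time. This sketch follows that comment, not the exact instruction sequence in cache.S. The enum values, struct dc_record and inval_dcache_area() are hypothetical. The line size is passed in rather than read from CTR_EL0.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

enum dc_op { DC_CIVAC, DC_IVAC };  /* clean+invalidate vs. invalidate only */

struct dc_record {
	enum dc_op op;
	uint64_t addr;  /* line-aligned address the operation targets */
};

/*
 * Model of invalidating [kaddr, kaddr + size): record the cache maintenance
 * operation chosen for each line in ops[] and return how many were recorded.
 */
size_t inval_dcache_area(uint64_t kaddr, uint64_t size, uint64_t line,
			 struct dc_record *ops, size_t max_ops)
{
	assert(line != 0 && (line & (line - 1)) == 0);  /* power of two */
	uint64_t end = kaddr + size;
	size_t n = 0;

	for (uint64_t addr = kaddr & ~(line - 1); addr < end; addr += line) {
		/* A line is partial if it sticks out past either end of the range. */
		bool partial = addr < kaddr || addr + line > end;

		assert(n < max_ops);
		ops[n].op = partial ? DC_CIVAC : DC_IVAC;
		ops[n].addr = addr;
		n++;
	}
	return n;
}
```

Take a 0x20-byte region at the start of a 64-byte line, such as a line-aligned boot_args. Its only line is partial, so the model reports a single clean-and-invalidate.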


3.2.3 el2_setup

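If the CPU is in EL2, it is set up and dropped back to EL1 (unless VHE is in use, in which case the kernel stays in EL2). el2_setup returns the mode the CPU booted in through w0.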
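el2_setup starts by reading the CurrentEL register. The sketch below shows that decision in C. CurrentEL reports the exception level in bits [3:2], and the result is mapped to BOOT_CPU_MODE_EL1 or BOOT_CPU_MODE_EL2. The values 0xe11/0xe12 are assumed to match arch/arm64/include/asm/virt.h, so check them against your tree. The register programming and the eret that actually drops to EL1 are not modeled.

```c
#include <assert.h>
#include <stdint.h>

/* Boot-mode values reported in w0 (assumed to match asm/virt.h). */
#define BOOT_CPU_MODE_EL1 0xe11
#define BOOT_CPU_MODE_EL2 0xe12

/* CurrentEL holds the exception level in bits [3:2]. */
unsigned current_el(uint64_t currentel_reg)
{
	return (unsigned)((currentel_reg >> 2) & 3);
}

/* Boot mode corresponding to a CurrentEL value read at kernel entry. */
uint32_t boot_cpu_mode(uint64_t currentel_reg)
{
	unsigned el = current_el(currentel_reg);

	assert(el == 1 || el == 2);  /* the boot protocol only allows EL1 or EL2 */
	return el == 2 ? BOOT_CPU_MODE_EL2 : BOOT_CPU_MODE_EL1;
}
```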


3.2.4 set_cpu_boot_mode_flag

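Sets the global variable __boot_cpu_mode to record the exception level the CPU was in when it entered the kernel (the value el2_setup returned in w0: BOOT_CPU_MODE_EL1 or BOOT_CPU_MODE_EL2).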
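The sketch below assumes __boot_cpu_mode is a two-element array initialised to {EL2, EL1}. Under that assumption, a CPU that booted at EL1 overwrites slot 0 and one that booted at EL2 overwrites slot 1. Equal slots then mean every CPU booted at the same level. This reflects a reading of the 5.10 sources, so treat it as an assumption. boot_cpu_mode_flags is a renamed stand-in for __boot_cpu_mode. The two query functions are modeled on is_hyp_mode_available() and is_hyp_mode_mismatched().

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define BOOT_CPU_MODE_EL1 0xe11
#define BOOT_CPU_MODE_EL2 0xe12

/* Initial contents: slot 0 = EL2, slot 1 = EL1. */
static uint32_t boot_cpu_mode_flags[2] = { BOOT_CPU_MODE_EL2, BOOT_CPU_MODE_EL1 };

/* A CPU that booted at EL1 writes slot 0; one that booted at EL2 writes slot 1. */
void set_cpu_boot_mode_flag(uint32_t mode)
{
	assert(mode == BOOT_CPU_MODE_EL1 || mode == BOOT_CPU_MODE_EL2);
	boot_cpu_mode_flags[mode == BOOT_CPU_MODE_EL2 ? 1 : 0] = mode;
}

/* True only if no CPU has reported booting at EL1. */
bool is_hyp_mode_available(void)
{
	return boot_cpu_mode_flags[0] == BOOT_CPU_MODE_EL2 &&
	       boot_cpu_mode_flags[1] == BOOT_CPU_MODE_EL2;
}

/* True if some CPUs booted at EL1 and others at EL2. */
bool is_hyp_mode_mismatched(void)
{
	return boot_cpu_mode_flags[0] != boot_cpu_mode_flags[1];
}
```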


3.2.5 __create_page_tables

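Page table initialization

- identity mapping

- Maps the idmap text section (__idmap_text_start to __idmap_text_end), the code that runs while the MMU is being turned on, with virtual address == physical address

- The identity mapping guarantees that the code around the point where the MMU is turned on keeps executing smoothly across the switch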

https://stackoverflow.com/questions/16688540/page-table-in-linux-kernel-space-during-boot/27266309#27266309

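1) Before the MMU is enabled, every address the CPU generates is a physical address; after it is enabled, every address it generates is a virtual address.

2) The CPU is pipelined: while executing the current instruction, it has very likely already generated the address of the next instruction (that is, already worked out where the next instruction is). If the MMU is not yet on, that address is a physical address.

So suppose the current instruction is the one that turns the MMU on. While it executes, the CPU has already generated an address (that of the next instruction), which, as explained in 2), is a physical address. Once that instruction completes and the MMU takes effect, the CPU treats the physical address it generated earlier as a virtual address and looks it up in the page tables to find the corresponding physical address. Because the identity mapping maps this code with VA == PA, the lookup returns the same address and execution continues at the correct instruction.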

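- Map the kernel image

- Maps the whole kernel (from KERNEL_START to KERNEL_END, i.e. _text to _end) at its link-time virtual address KIMAGE_VADDR, plus the KASLR offset if any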

The virtual address width can be configured up to a maximum of 52 bits (36, 39, 42, 47, 48 or 52).
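The VA width and the page size together determine how many levels of page tables the boot code must build. The sketch below counts those levels using the formula of ARM64_HW_PGTABLE_LEVELS() from arch/arm64/include/asm/pgtable-hwdef.h. pgtable_levels() is a hypothetical wrapper.

```c
#include <assert.h>

/*
 * Translation levels for a VA width and page size (page_shift = log2(page size)).
 * Each level resolves (page_shift - 3) bits because a table entry is 8 bytes;
 * (va_bits - 4) / (page_shift - 3) equals
 * ceil((va_bits - page_shift) / (page_shift - 3)).
 */
int pgtable_levels(int va_bits, int page_shift)
{
	assert(page_shift == 12 || page_shift == 14 || page_shift == 16);
	assert(va_bits >= 36 && va_bits <= 52);
	return (va_bits - 4) / (page_shift - 3);
}
```

For example, 4 KB pages give 3 levels with 39-bit VAs and 4 levels with 48-bit VAs. 64 KB pages with 42-bit VAs need only 2.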


3.2.6 __cpu_setup

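CPU initialization

- Cache and TLB handling

- Invalidate them

- Set up TCR_EL1 and SCTLR_EL1

- Kernel space and user space use different page tables, so there are two Translation Table Base Registers (TTBR0_EL1 and TTBR1_EL1), forming two separate address translation regimes; TCR_EL1 mainly controls these two regimes

- SCTLR_EL1 is the register that controls the system as a whole (including the memory system)

- Get the CPU ready for the MMU to be turned on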
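The split between the two translation regimes can be sketched as follows. TxSZ = 64 - VA bits is the architectural encoding of the region size. select_ttbr() is a hypothetical helper and assumes top-byte-ignore is disabled. In that case the upper address bits must be all zeros (TTBR0_EL1, user space) or all ones (TTBR1_EL1, kernel space).

```c
#include <assert.h>
#include <stdint.h>

enum ttbr { TTBR_FAULT = -1, TTBR0 = 0, TTBR1 = 1 };

/* TCR_EL1.T0SZ / T1SZ: the region covers 2^(64 - TxSZ) bytes. */
unsigned tcr_tsz(unsigned va_bits)
{
	assert(va_bits >= 36 && va_bits <= 52);
	return 64 - va_bits;
}

/* Pick the translation table base register a VA is walked through (TBI off). */
enum ttbr select_ttbr(uint64_t va, unsigned va_bits)
{
	assert(va_bits >= 36 && va_bits <= 52);
	uint64_t upper = va >> va_bits;             /* bits above the VA range */
	uint64_t all_ones = UINT64_MAX >> va_bits;  /* value if every upper bit is 1 */

	if (upper == 0)
		return TTBR0;       /* user space */
	if (upper == all_ones)
		return TTBR1;       /* kernel space */
	return TTBR_FAULT;      /* outside both ranges */
}
```

With 39-bit VAs, T1SZ is 25 and the entry address 0xffffffc010000000 is walked through TTBR1_EL1.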


3.2.7 __primary_switch


3.2.8 __enable_mmu

Turn on the MMU
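Turning the MMU on comes down to setting the M bit (bit 0) in SCTLR_EL1 and then executing an isb. The data-cache (C, bit 2) and instruction-cache (I, bit 12) enables are usually set alongside it. sctlr_enable_mmu() is a hypothetical helper that only shows which bits are involved. It is not how the kernel builds its SCTLR_EL1 value.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define SCTLR_M (UINT64_C(1) << 0)   /* stage 1 MMU enable for EL1&0 */
#define SCTLR_C (UINT64_C(1) << 2)   /* data (unified) cache enable */
#define SCTLR_I (UINT64_C(1) << 12)  /* instruction cache enable */

bool mmu_enabled(uint64_t sctlr)
{
	return (sctlr & SCTLR_M) != 0;
}

/* Hypothetical helper: value to write with "msr sctlr_el1" to enable MMU and caches. */
uint64_t sctlr_enable_mmu(uint64_t sctlr)
{
	assert(!mmu_enabled(sctlr));  /* only meaningful while the MMU is still off */
	return sctlr | SCTLR_M | SCTLR_C | SCTLR_I;
}
```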


3.2.9 __primary_switched

Prepare the C environment
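In 5.10, __primary_switched roughly does the following before branching to start_kernel:

- points the stack pointer at the top of init_thread_union
- installs the exception vectors in VBAR_EL1
- saves the FDT pointer
- records kimage_voffset
- clears the BSS

The sketch below models three of these steps in user space. fake_bss, fake_init_stack, struct c_env and prepare_c_env() are hypothetical, and the physical load address used below is made up.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical stand-ins for linker-provided regions. */
static uint8_t fake_bss[256];
static _Alignas(16) uint8_t fake_init_stack[4096];

struct c_env {
	uint8_t *sp;              /* initial stack pointer */
	uint64_t kimage_voffset;  /* kernel virtual address minus physical address */
};

struct c_env prepare_c_env(uint64_t kimage_vaddr, uint64_t kernel_pa)
{
	struct c_env env;

	/* The stack grows down, so start at the top of the initial stack. */
	env.sp = fake_init_stack + sizeof(fake_init_stack);
	assert((uintptr_t)env.sp % 16 == 0);  /* AAPCS64 expects a 16-byte aligned SP */

	/* C code assumes zero-initialised globals, so clear the BSS. */
	memset(fake_bss, 0, sizeof(fake_bss));

	/* Offset for converting kernel-image physical addresses to virtual ones. */
	env.kimage_voffset = kimage_vaddr - kernel_pa;
	return env;
}
```

Take kimage_vaddr = 0xffffffc010000000 and a hypothetical physical load address of 0x80000000. kimage_voffset is then 0xffffffbf90000000. Adding it to a physical address inside the image yields the matching kernel virtual address.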


3.2.10 start_kernel

The assembly stage ends here and the second stage begins; the second stage is written in C.

3.3 Stage 1 summary

TODO
